Building a Gatsby CD Pipeline with GitHub Actions & Digital Ocean

Following Netlify's acquisition of Gatsby Cloud in February 2023 and the resulting discontinuation of Gatsby Cloud's services, we had to find an alternative way to host one of our customers' websites. Read on to dive deeper into our journey toward a CD pipeline built on GitHub Actions, Digital Ocean Spaces, and self-hosted GitHub Runners.

Intro

With the acquisition of Gatsby Cloud by Netlify in February 2023, Gatsby Cloud stopped providing its products and services. Consequently, our first goal was to migrate our system from Gatsby Cloud to Netlify. Automatic production and feature-branch builds, as well as other functions such as Slack and GitHub notifications, make Netlify an easy-to-use service provider. The migration to Netlify worked, but only with the help of the Netlify support desk.

However, our project, consisting of approximately 37k static pages with a full build time of around 33 minutes, exceeded the limits of the Netlify Pro Plan. As the Enterprise Plan we were offered exceeded our budget, we built our own deployment infrastructure using GitHub Actions and Digital Ocean. In the end, we were glad we did, as this solution beats the alternatives in performance, maintainability, scalability, transparency, and pricing.

Requirements

Automatic Production and Development Build + Deployment

Preview for Feature-Branches

Differentiation between full and incremental builds

Using a Content Delivery Network (CDN) compatible with the previously used AWS CloudFront

Approach

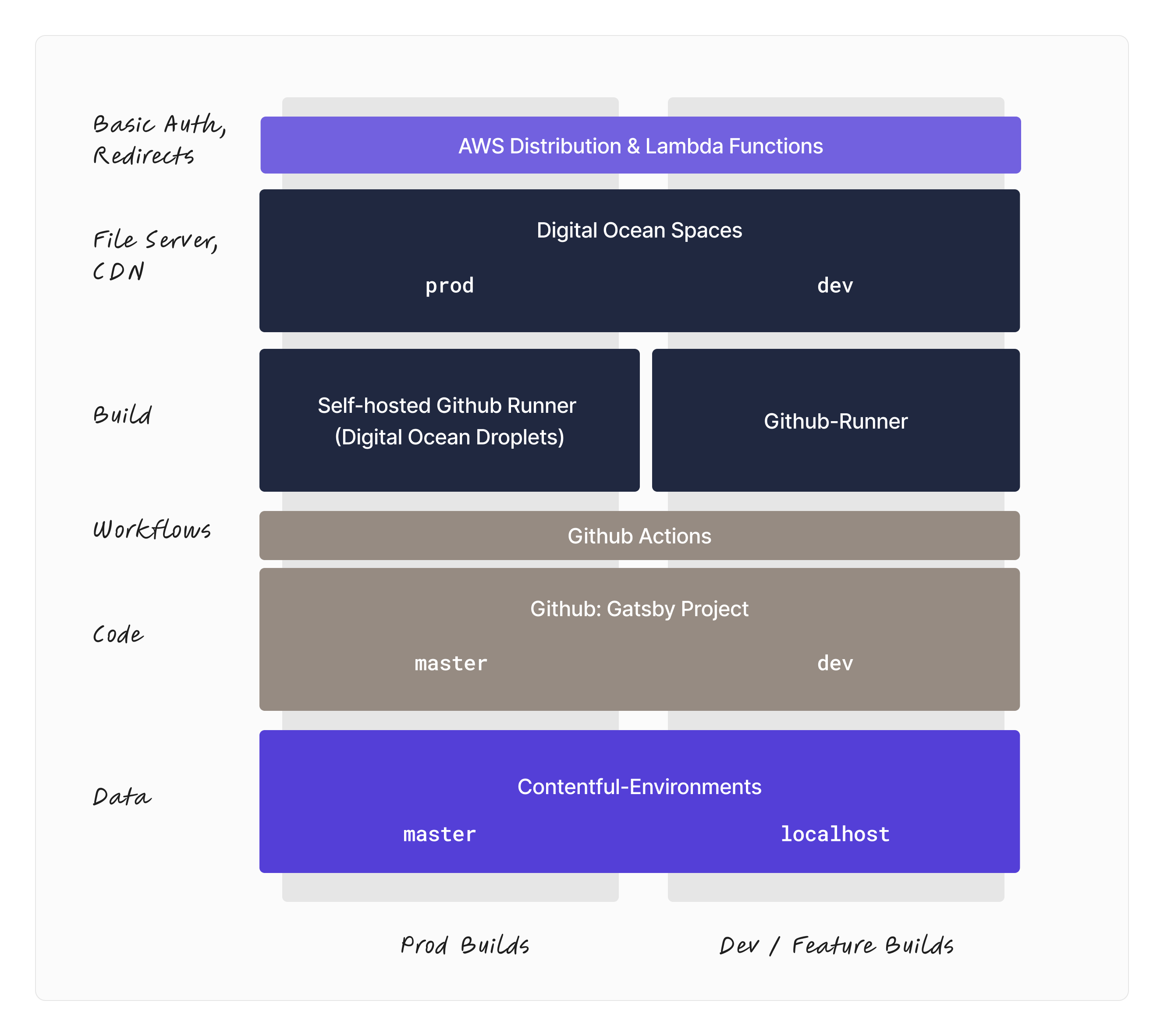

We decided to serve the files from Digital Ocean Spaces. These S3-compatible object storages include a built-in CDN. In line with our requirements, one Space is used for production and one for development and feature branches. We support feature branches with a folder structure based on the branch name, so only one Space is needed for development purposes.

To optimize build time and reduce our usage of GitHub-hosted runners, the production builds run on self-hosted GitHub Runners. A self-hosted GitHub Runner is GitHub's runner application running on a server of your own, which GitHub workflows can then use as a dedicated runner. Their advantages include lower cost and the ability to use more powerful machines. On the other hand, setting up self-hosted runners is more complex than using native runners, and you are responsible for maintaining them yourself.

In our case, these GitHub Runners are hosted on Digital Ocean Droplets. They build the project according to the requested build type (full build or incremental build) and sync the resulting files to the corresponding Digital Ocean Space.

As our development infrastructure does not depend on high build speed and requires less storage space, development and feature branch builds still rely on native GitHub runners.

The following chart shows the elements of the infrastructure separated between production builds and development or feature builds.

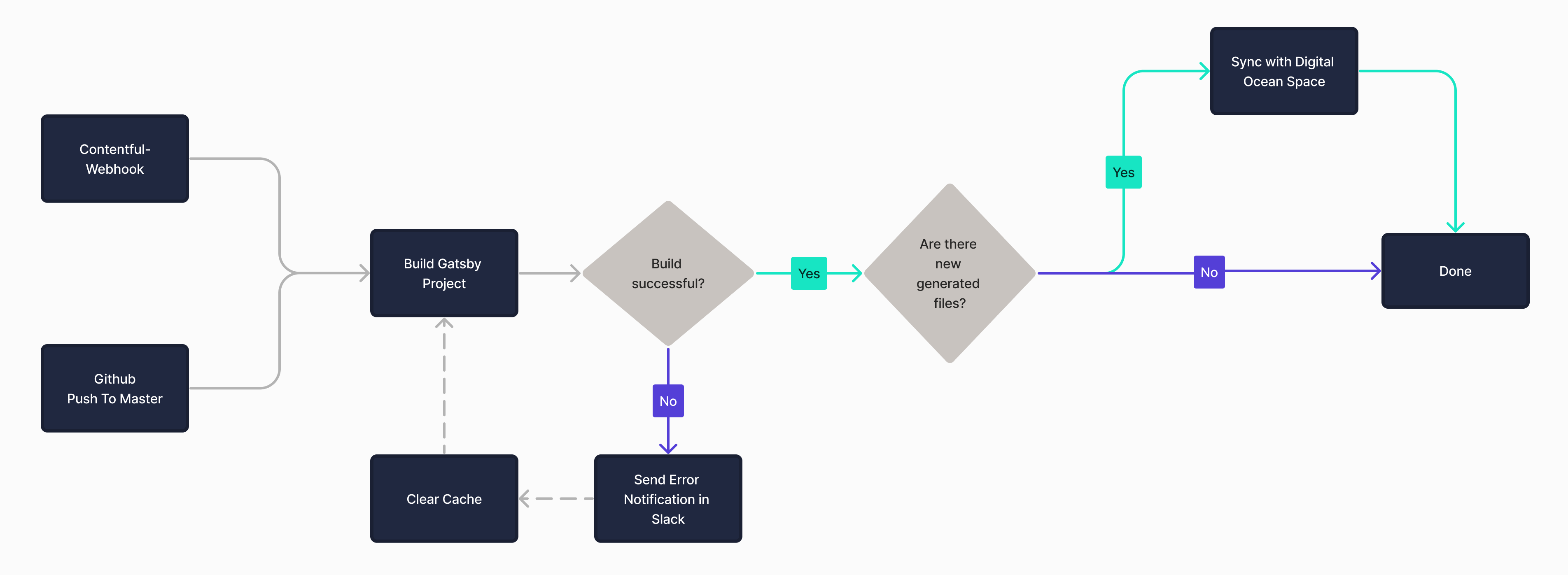

Moving on to the deployment process for production and development builds with GitHub Actions: builds can be run manually or triggered automatically, e.g., by a webhook from the Content Management System (in this case Contentful).

If a full build is started, the cache is cleaned beforehand; otherwise, an incremental build runs. Incremental builds considerably reduce the build time, as only the content updated in the CMS is rebuilt. After the files are generated, only new or changed files are copied to the Space to shorten the upload time.

After building and copying the files to the appropriate Space, a Slack notification (and a GitHub comment for pull requests) is sent with the status of the build.
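The notification steps are not part of the workflow discussed later. As a rough idea, a follow-up job could report the build result to Slack. This is a minimal sketch, not our exact implementation: it assumes a Slack incoming webhook whose URL is stored in a hypothetical repository secret named SLACK_WEBHOOK_URL, and a build job with the id "build".

```yaml
jobs:
  # build: ... (the build job of the prod workflow)

  # Hypothetical notification job that reports the build result to Slack
  notify:
    needs: build
    # always() makes this job run even if the build failed or was cancelled
    if: ${{ always() }}
    runs-on: ubuntu-latest
    steps:
      - name: Send Slack notification
        env:
          # Assumed repository secret containing a Slack incoming-webhook URL
          SLACK_WEBHOOK_URL: ${{ secrets.SLACK_WEBHOOK_URL }}
          # One of: success, failure, cancelled, skipped
          BUILD_RESULT: ${{ needs.build.result }}
        run: |
          curl -sS -X POST -H 'Content-type: application/json' \
            --data "{\"text\": \"Prod build finished: ${BUILD_RESULT}\"}" \
            "$SLACK_WEBHOOK_URL"
```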

Since Digital Ocean Spaces does not support more complex web server configuration, we use AWS CloudFront with Lambda functions for basic authentication on restricted pages and for redirects from folder paths to the actual HTML page or to error pages.

Technical Foundation

Alright, now you have a high-level overview of the deployment pipeline. Let's dive into the details of the heart of this whole pipeline: the GitHub Actions workflow file running on our runner hosted on Digital Ocean.

We will first describe the prod workflow in detail and then discuss the difficulties and differences in the dev workflow. We will skip some parts of the workflow, such as deleting cancelled workflows, Slack notifications, and GitHub pull request comments, since these tasks are not essential to the pipeline and only improve our internal process.

Prod Workflow

```yaml
name: Build & Deploy Prod

on:
  workflow_dispatch:
    inputs:
      full_build:
        type: boolean
        description: "Run full build"
        required: true
        default: false
  push:
    branches:
      - master
  repository_dispatch:
    types: ["contentful-update", "automatic-build"]

# Will cancel pending jobs as soon as another one is added to the queue.
# Will not impact currently running jobs (cancel-in-progress: false)
concurrency:
  group: prod-build
  cancel-in-progress: false
```

First, we define the triggers for this workflow:

- “workflow_dispatch”: manual trigger, with the option to run a full build in emergencies
- “push”: triggered by code releases to master
- “contentful-update”: triggered by Contentful (our CMS) via webhooks
- “automatic-build”: triggered twice nightly (one full build and one incremental build) to work around occasional Contentful data inconsistencies we saw when running only incremental builds
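The article does not show how the nightly “automatic-build” events are sent. One possible sketch, assuming a separate scheduled workflow that uses the GitHub CLI to call the repository dispatch REST endpoint (the same endpoint a Contentful webhook can call with the event type “contentful-update”). The workflow name and cron times are made-up examples. Unlike most events caused by the default GITHUB_TOKEN, repository_dispatch events created with it do start new workflow runs.

```yaml
name: Nightly Automatic Build

on:
  schedule:
    # Example times (UTC): full build at 01:00, incremental build at 03:00
    - cron: "0 1 * * *"
    - cron: "0 3 * * *"

permissions:
  # Creating a repository_dispatch event requires write access to contents
  contents: write

jobs:
  dispatch:
    runs-on: ubuntu-latest
    steps:
      - name: Send automatic-build event
        env:
          GH_TOKEN: ${{ secrets.GITHUB_TOKEN }}
          # Evaluates to the string "true" only for the 01:00 run
          FULL_BUILD: ${{ github.event.schedule == '0 1 * * *' }}
        # -f sends the value as a string, matching the
        # github.event.client_payload.full_build == 'true' check in the prod workflow
        run: |
          gh api "repos/${{ github.repository }}/dispatches" \
            -f event_type=automatic-build \
            -f "client_payload[full_build]=$FULL_BUILD"
```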

Now let's move on to the concurrency settings. Since Contentful autosaves, the webhook fires quite often while an editor is working (on a busy day, about every other minute). This creates a queue of builds triggered by these update events, but most of them will not produce any output, because each build fetches all pending updates, not only the one that triggered it. Using concurrency, we cancel these unnecessary pending builds and thus shorten the time to delivery, as fewer builds that produce no output block the build queue. However, running builds must not be cancelled: this would delay the delivery of the latest content and could lead to inconsistencies if a build were cancelled while uploading files to Digital Ocean Spaces.

```yaml
jobs:
  build:
    name: Build
    runs-on: prod-runner
    outputs:
      gatsby_build_result: ${{ steps.gatsby_build.outputs.gatsby_build_result }}
      build_status: ${{ job.status }}
    steps:
      - uses: actions/checkout@v4
        with:
          clean: ${{ github.event_name == 'push' }}

      - name: Install dependencies
        run: yarn install --production

      - name: Clean Project
        if: ${{ github.event.inputs.full_build == 'true' || github.event.client_payload.full_build == 'true' }}
        run: |
          yarn clean

      - name: Build Gatsby site
        id: gatsby_build
        # An explicit "shell: bash" runs the script with "-eo pipefail", so a failing
        # "yarn build" fails the step even though its output is piped into tee
        shell: bash
        env:
          STAGE: prod
          ENVIRONMENT: motorsport
        run: |
          rm -f output.log
          # Write all console output to output.log while still forwarding it
          # to the GitHub Actions log, see: https://askubuntu.com/a/161946
          yarn build | tee output.log
          # Extract only the first matching line instead of the whole build output;
          # "|| true" keeps the step green when grep finds no match
          GATSBY_BUILD_RESULT=$(grep -m 1 "There are no new or changed html files to build" output.log || true)
          # Forward the result to the job outputs
          echo "gatsby_build_result=$GATSBY_BUILD_RESULT" >> "$GITHUB_OUTPUT"
          echo "Build result: ✨ ${GATSBY_BUILD_RESULT:-"There are new or changed html files. Deployment pending."}"

      - name: Copy to Digital Ocean Spaces
        if: ${{ !contains(steps.gatsby_build.outputs.gatsby_build_result, 'There are no new or changed html files to build') }}
        run: rclone sync public rsync-config:do-space --size-only
```

Now for the really interesting part: the first job in the workflow, the actual Gatsby build. Let's look at the most important details. First of all, runs-on: prod-runner defines which GitHub runner the job runs on. The value is a runner label, in our case the label of our self-hosted runner.

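Because the workflow runs on our own self-hosted runner, we can skip many steps a GitHub Action would normally require. This speeds up the build considerably, since, e.g., setup actions don't need to be downloaded and install steps can be skipped. The Gatsby cache of previous builds also stays on the machine, which is what makes incremental builds possible.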

The first step checks out our code repository. Only when the build is triggered by a push to master do we perform a clean checkout (the checkout action's clean option runs git clean -ffdx, which also removes git-ignored build artifacts such as the Gatsby cache) and thus a fresh build. This ensures that the code changes that triggered the build are reflected on all pages. “yarn clean” runs only when a full build is requested and deletes the Gatsby cache and the generated public HTML files beforehand.

“yarn install --production” installs the dependencies. With the production flag, dev dependencies are skipped to save time and disk space.

Now we get to a little bit of magic. On a busy day, Contentful triggers many small updates for which no new HTML content is generated (even though Gatsby may regenerate some files). Since the upload to Digital Ocean takes quite a lot of time, we only want to do it when necessary. So we run “yarn build” and save its output to a log file. If Gatsby prints “There are no new or changed html files to build”, we skip the upload step.

As the last step, we upload the files to Digital Ocean. We use rclone to sync our remote bucket with the build output (you need to configure an rclone remote for your Digital Ocean Space first). The “--size-only” flag speeds up this process: Gatsby touches many files even when their content does not change, so rclone's default comparison of modification time and size would re-upload them. With the flag, rclone compares file sizes only and skips files whose size is unchanged. The trade-off is that a file whose content changes while its size stays exactly the same is skipped as well.
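Instead of a config file on the runner, rclone can also read a remote definition from environment variables named RCLONE_CONFIG_<REMOTE>_<OPTION>. A minimal sketch of the upload step configured this way; the remote name “doprod”, the ams3 endpoint, and the secrets DO_SPACES_KEY and DO_SPACES_SECRET are assumptions. The remote is renamed because rclone requires remote names used in environment variables to contain only letters, digits, and underscores, which rules out “rsync-config”.

```yaml
      - name: Copy to Digital Ocean Spaces
        if: ${{ !contains(steps.gatsby_build.outputs.gatsby_build_result, 'There are no new or changed html files to build') }}
        env:
          # Defines a hypothetical rclone remote "doprod" without a config file
          RCLONE_CONFIG_DOPROD_TYPE: s3
          RCLONE_CONFIG_DOPROD_PROVIDER: DigitalOcean
          # Example region endpoint; use the region your Space lives in
          RCLONE_CONFIG_DOPROD_ENDPOINT: ams3.digitaloceanspaces.com
          RCLONE_CONFIG_DOPROD_ACCESS_KEY_ID: ${{ secrets.DO_SPACES_KEY }}
          RCLONE_CONFIG_DOPROD_SECRET_ACCESS_KEY: ${{ secrets.DO_SPACES_SECRET }}
          # Assumption: uploaded files should be publicly readable
          RCLONE_CONFIG_DOPROD_ACL: public-read
        # "do-space" is the name of the Space (bucket)
        run: rclone sync public doprod:do-space --size-only
```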

Differences in the Dev Workflows

The dev builds follow the same general structure as the prod builds but differ in some core aspects, mainly because they use native GitHub Runners instead of a dedicated, customized build agent. Let's sum them up:

Native GitHub Runner: We use the native GitHub Runners because dev builds have lower requirements in terms of build performance and frequency. This type of build is used far less often, so a runner does not have to run permanently, and native runners don't require complex start-up and shutdown logic.

Different triggers: Since we don’t rely on CMS updates, there are no automatic triggers. Instead, we focus on the development process and react to updates to the main development branch, feature branches, and pull requests.

Remove Deployment: We don't want feature branch deployments to persist, so a separate workflow deletes a branch's files from Digital Ocean once its pull request is closed.
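A minimal sketch of such a cleanup workflow, not our exact implementation. It assumes the dev Space is named “dev-space”, each branch is deployed to a folder named after the branch, and the rclone remote is a hypothetical “dodev” defined through environment variables with placeholder secrets.

```yaml
name: Remove Feature Deployment

on:
  pull_request:
    # "closed" fires for both merged and unmerged pull requests
    types: [closed]

jobs:
  remove:
    name: Remove deployment
    runs-on: ubuntu-latest
    env:
      # Source branch of the closed pull request
      BRANCH: ${{ github.head_ref }}
      # Hypothetical rclone remote "dodev", defined via environment variables
      RCLONE_CONFIG_DODEV_TYPE: s3
      RCLONE_CONFIG_DODEV_PROVIDER: DigitalOcean
      RCLONE_CONFIG_DODEV_ENDPOINT: ams3.digitaloceanspaces.com
      RCLONE_CONFIG_DODEV_ACCESS_KEY_ID: ${{ secrets.DO_SPACES_KEY }}
      RCLONE_CONFIG_DODEV_SECRET_ACCESS_KEY: ${{ secrets.DO_SPACES_SECRET }}
    steps:
      - name: Install rclone
        run: curl -fsSL https://rclone.org/install.sh | sudo bash

      - name: Delete the branch folder from the dev Space
        # purge removes the path together with all of its contents
        run: rclone purge "dodev:dev-space/$BRANCH"
```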

Concurrency: In contrast to prod, we also cancel running builds, since only the latest code state matters (e.g., if two commits are pushed right after each other, we only want the second one to be built).

Path Prefix: Since we use a single Digital Ocean Space to host all feature branches, we store the files of each feature deployment in a subfolder of the dev Space. Hence, the deployment is reachable in the browser under a sub-route. To make the Gatsby build compatible with this setup, we use Gatsby's path prefix option (pathPrefix in gatsby-config combined with gatsby build --prefix-paths).
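Putting these differences together, a dev workflow could look roughly like the sketch below. It is an illustration under several assumptions, not our actual file: the main development branch is called “develop”, gatsby-config.js sets pathPrefix from a hypothetical PATH_PREFIX environment variable, and the rclone remote “dodev”, the Space “dev-space”, the ams3 endpoint, and the secret names are placeholders.

```yaml
name: Build & Deploy Dev

on:
  push:
    branches:
      - develop # assumed name of the main development branch
  pull_request:

# One group per branch; a newer commit cancels the running build of that branch
concurrency:
  group: dev-build-${{ github.head_ref || github.ref_name }}
  cancel-in-progress: true

jobs:
  build:
    name: Build
    # Native GitHub-hosted runner instead of the self-hosted prod runner
    runs-on: ubuntu-latest
    env:
      # Branch name, used as the subfolder in the dev Space
      BRANCH: ${{ github.head_ref || github.ref_name }}
      # Hypothetical rclone remote "dodev", defined via environment variables
      RCLONE_CONFIG_DODEV_TYPE: s3
      RCLONE_CONFIG_DODEV_PROVIDER: DigitalOcean
      RCLONE_CONFIG_DODEV_ENDPOINT: ams3.digitaloceanspaces.com
      RCLONE_CONFIG_DODEV_ACCESS_KEY_ID: ${{ secrets.DO_SPACES_KEY }}
      RCLONE_CONFIG_DODEV_SECRET_ACCESS_KEY: ${{ secrets.DO_SPACES_SECRET }}
    steps:
      - uses: actions/checkout@v4

      - uses: actions/setup-node@v4
        with:
          node-version: 20
          cache: yarn

      - name: Install dependencies
        run: yarn install

      - name: Build Gatsby site with path prefix
        env:
          # Assumes gatsby-config.js reads pathPrefix from this variable
          PATH_PREFIX: /${{ github.head_ref || github.ref_name }}
        run: yarn gatsby build --prefix-paths

      - name: Install rclone
        run: curl -fsSL https://rclone.org/install.sh | sudo bash

      - name: Copy to the branch folder in the dev Space
        run: rclone sync public "dodev:dev-space/$BRANCH" --size-only
```

Cancelling in-progress runs is acceptable here because a half-finished upload only affects a preview folder, whereas in prod it could leave the live site inconsistent.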

Summary

In conclusion, we were able to create a standalone deployment pipeline for a Gatsby project that is independent of Netlify or any other managed hosting platform. The resulting pipeline keeps up with the performance and stability of their Enterprise offerings, and we additionally implemented comparable features such as different build types and notifications. The figures below illustrate what we improved with this migration: we reduced the full build time by about 40% (from around 33 to 20 minutes), halved the monthly price, and so far (with the new CD pipeline running for approximately one month) we have not experienced any build failures. With Gatsby Cloud, we had approximately 10 failed builds per month due to timeouts, exceeded memory limits, and Gatsby Cloud issues.

- Pricing: $53/month
- Full build time: 20 min
- Incremental build time: 3-4 min
- Error rate: none (1 month running)